What if a fashion brand markets its clothes as 100% sustainable but outsources most of its production to sweatshops with a reputation for environmental degradation? Consumers frequently face such deceptive practices and misleading eco-friendly claims, which ultimately erode their confidence in brands and products. Now suppose the same thing happens in the context of artificial intelligence (AI), with companies claiming that their AI initiatives align with Environmental, Social, and Governance (ESG) principles. While AI has the potential to change life for the better by offering fixes for the many crises facing modern civilization, it may also become the latest subject of greenwashing-style marketing, or ‘AI washing’. In many cases, organizations have no concrete basis for claiming that their AI systems are ethical or responsible, yet they still market them as such.

The point of convergence here is where the sociology of engineering meets what we can call responsible AI and the ESG paradigm. Organizations have to move from talking about action to taking action that brings AI development and ESG groundwork together. This blog post walks through what responsible AI is, what ESG principles mean, some of the finer points of linking the two, and why that link is a sine qua non of the AI revolution.

Understanding Responsible AI and ESG Principles: A Shared Mandate

AI is a broad concept that covers the design, development, deployment, and supervision of machines that perform tasks normally associated with human intelligence. Responsible AI focuses on the fairness, accountability, and ethical use of those systems. ESG standards, in turn, are the criteria used to track an organization’s sustainability performance on environmental, social, and governance issues, with the governance component helping companies establish responsible and ethical leadership.

At first glance these two fields may seem different, but they share one significant objective: to benefit society in the long run and reduce negative impacts. Aligning AI with ESG principles means designing AI technologies that are socially responsible, so that they serve not only business needs but also social and environmental progress. Here are the most important points of overlap:

- Environment: Modern AI models are very powerful and therefore require a large amount of computation, which in turn carries an energy cost. Addressing this cost falls under the ‘E’ in ESG, which encourages energy-efficient, ‘green’ AI.

- Social: The adoption of AI in sectors like recruitment, health care, and law enforcement increasingly shapes decisions that affect people’s lives. Responsible AI therefore emphasizes inclusion and the removal of bias and discrimination, which corresponds to the ‘S’ of ESG.

- Governance: Algorithmic transparency and clear accountability align with the ‘G’, helping to ensure that the organization complies with applicable rules and codes of ethics.

Mitigating AI’s Environmental Impact: Optimizing Energy Efficiency

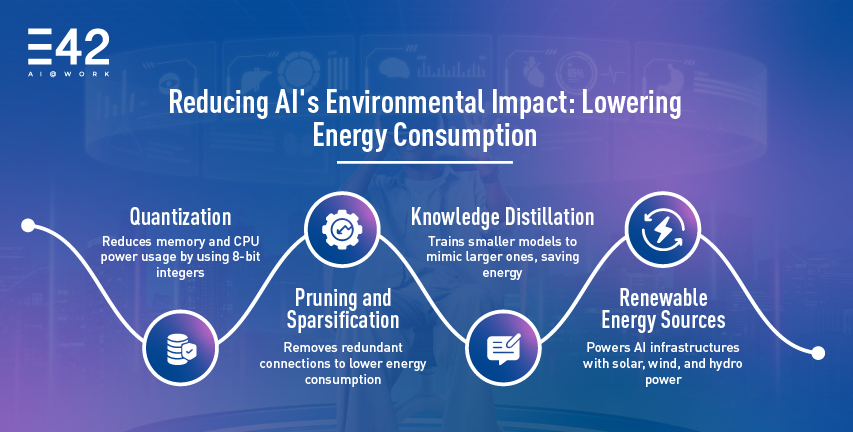

The carbon footprint of AI is one of the major environmental issues associated with it. By some estimates, training a single large language model can produce emissions comparable to those of several cars over their entire lifespans. It is therefore necessary to design and operate these systems with energy efficiency in mind. Here are some strategies to reduce AI’s environmental footprint:

- Quantization: A technique that reduces the number of bits used to store a model’s weights. Lower-precision formats, such as 8-bit integers, can significantly lower memory use and compute power consumption compared with 32-bit floating-point precision. Frameworks like TensorFlow Lite and PyTorch provide built-in support for quantization.

- Pruning and Sparsification: These techniques eliminate connections, or weights, that contribute little or nothing to the functioning of a neural network. Pruning removes the less important connections, while sparsification sets weights to zero, creating a more efficient model. By reducing the number of active connections, these techniques speed up processing and decrease energy use.

- Knowledge Distillation: This method trains a small language model (SLM) that is cheap to run to reproduce the behavior of a more complex large language model (LLM) with many more parameters. The SLM captures the most important knowledge extracted from the LLM, so the result is a smaller, faster model that consumes less power.

- Hardware Optimization: Specialized hardware such as GPUs, TPUs, and FPGAs is well suited to the matrix operations that underpin many AI algorithms. For these workloads, such devices are typically much faster than standard CPUs and also more energy-efficient, making them the better choice for AI workloads.

- Renewable Energy Sources: Data centers and AI infrastructure that switch to renewable energy sources such as solar, wind, and hydro power can noticeably reduce the carbon dioxide emissions from AI operations. Many cloud providers are also adopting renewable energy to power their facilities, which supports sustainable AI development.
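
To make the quantization and pruning strategies from the list above more concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not how TensorFlow Lite or PyTorch implement these features: the function names (`quantize_int8`, `dequantize`, `prune_by_magnitude`) and the sample weights are hypothetical, and real frameworks operate on tensors with calibration and fine-tuning steps that are omitted here.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole list; fall back to 1.0 if all weights are zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, fraction):
    """Set the given fraction of smallest-magnitude weights to zero."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from the smallest to the largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -0.03, 0.41, -1.27, 0.004, 0.6]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

pruned = prune_by_magnitude(weights, 0.5)

print("quantized:", q)
print("largest rounding error:", max_error)
print("pruned:", pruned)
```

Each quantized weight fits in one byte instead of the four bytes a 32-bit float needs, at the price of a rounding error of at most half the scale per weight. The pruned list keeps only the three largest-magnitude weights, which is the kind of sparsity that lets hardware and libraries skip work.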

Mitigating AI Bias: Strategies to Ensure Fairness in AI Outcomes

Why might AI models be biased? AI systems trained on biased datasets can reproduce and, worse still, deepen existing social inequalities. For example, an AI tool adopted by a recruiter might inadvertently favor candidates from an already well-represented group, leading to more unfair outcomes. To mitigate these concerns, here are a few tactics:

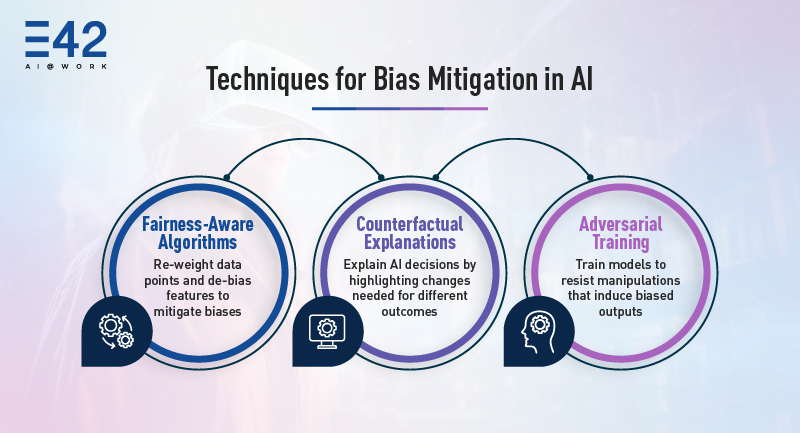

- Fairness-Aware Algorithms: These methods adjust the dataset or the training process so that biases are reduced, in line with ESG principles. They include ‘re-weighting’ data points to increase the influence of minority groups, which leads to a more balanced representation of each group, and removing or de-biasing features so that the model does not rely on sensitive attributes such as race or gender. These steps make training more equitable.

- Counterfactual Explanations: This approach explains AI decisions in terms a human analyst can easily understand by showing how small changes in the input would lead to a different outcome. By highlighting the factors that influence decisions, it helps users judge whether biases are present and take steps to address them.

- Adversarial Training: In this process, models are trained to remain robust against adversarial examples, that is, modifications of the input data that can lead to unjust or biased results. With improved robustness, models become less vulnerable to data corruption, distorted decisions, and hidden biases.
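
The ‘re-weighting’ idea from the list above can be sketched in a few lines. This is one common weighting scheme, shown as an assumption rather than the only option: each sample gets a weight inversely proportional to the size of its group, so every group contributes the same total weight during training. The function name `balanced_group_weights` and the group labels are hypothetical.

```python
from collections import Counter


def balanced_group_weights(groups):
    """Weight each sample by n_samples / (n_groups * group_size)."""
    counts = Counter(groups)
    n_samples = len(groups)
    n_groups = len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]


# Hypothetical dataset: group "A" is over-represented, group "B" is a minority.
groups = ["A"] * 8 + ["B"] * 2
weights = balanced_group_weights(groups)

# Sum the weights per group to check that both groups now carry equal influence.
totals = {}
for g, w in zip(groups, weights):
    totals[g] = totals.get(g, 0.0) + w

print("weight per sample:", dict(zip(groups, weights)))
print("total weight per group:", totals)
```

Weights like these are typically passed to a training routine that accepts per-sample weights. Re-weighting balances representation only; it does not by itself remove bias encoded in the features or labels.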

Enhancing AI Transparency: Overcoming Governance Failures

Black-box AI models, where the decision-making process is opaque, pose significant challenges. To address this:

- Explainable AI (XAI) Techniques: Methods like feature importance, rule extraction, and LIME (Local Interpretable Model-agnostic Explanations) can make AI models more interpretable by providing insight into the reasoning behind their decisions.

- Model Cards: Standardized documentation for AI models, including information on intended use cases, limitations, potential biases, training data, and performance metrics, can help users understand the capabilities and limitations of AI models and make informed decisions.
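
As a rough illustration of the model card idea, the sketch below captures the fields named in the list above as a small Python data structure. The `ModelCard` class, its field names, the `missing_sections` helper, and every value in the example are hypothetical placeholders rather than a standard schema or real measurements.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Dict, List


@dataclass
class ModelCard:
    """Minimal, illustrative model card; field names are assumptions."""
    model_name: str
    intended_use: List[str]
    limitations: List[str]
    potential_biases: List[str]
    training_data: str
    performance_metrics: Dict[str, float] = field(default_factory=dict)

    def missing_sections(self):
        """Return the names of sections that were left empty."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self):
        """Serialize the card so it can be published alongside the model."""
        return json.dumps(asdict(self), indent=2)


# All values below are placeholders for illustration only.
card = ModelCard(
    model_name="resume-screening-classifier",
    intended_use=["Rank applications for human review"],
    limitations=["Not validated for roles outside the training data"],
    potential_biases=[],  # deliberately left empty to show the check below
    training_data="Historical applications (placeholder description)",
    performance_metrics={"accuracy": 0.9},  # placeholder, not a real result
)

print(card.to_json())
print("sections still to fill in:", card.missing_sections())
```

A check like `missing_sections` could run in a release pipeline so that a model is not published while its documented biases or limitations are still blank.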

By implementing these mitigation strategies, we can strive to develop and deploy AI systems that are not only powerful but also responsible, ethical, and sustainable.

Conclusion

In an era where technology is reshaping every facet of life, responsible AI aligned with ESG principles is not just a moral imperative but a strategic necessity. The risks of AI washing are too great to ignore, from environmental degradation to social inequities and governance failures. By embedding ESG considerations into AI development, organizations can unlock long-term value, foster trust, and contribute to a sustainable future.

As stakeholders increasingly scrutinize AI’s societal impact, those who prioritize responsibility over rhetoric will lead the way. It’s time to move beyond the hype and ensure that AI genuinely serves humanity’s collective good.

To implement responsible AI in your enterprise and align your AI initiatives with ESG principles for sustainable success, visit E42.